Three years on

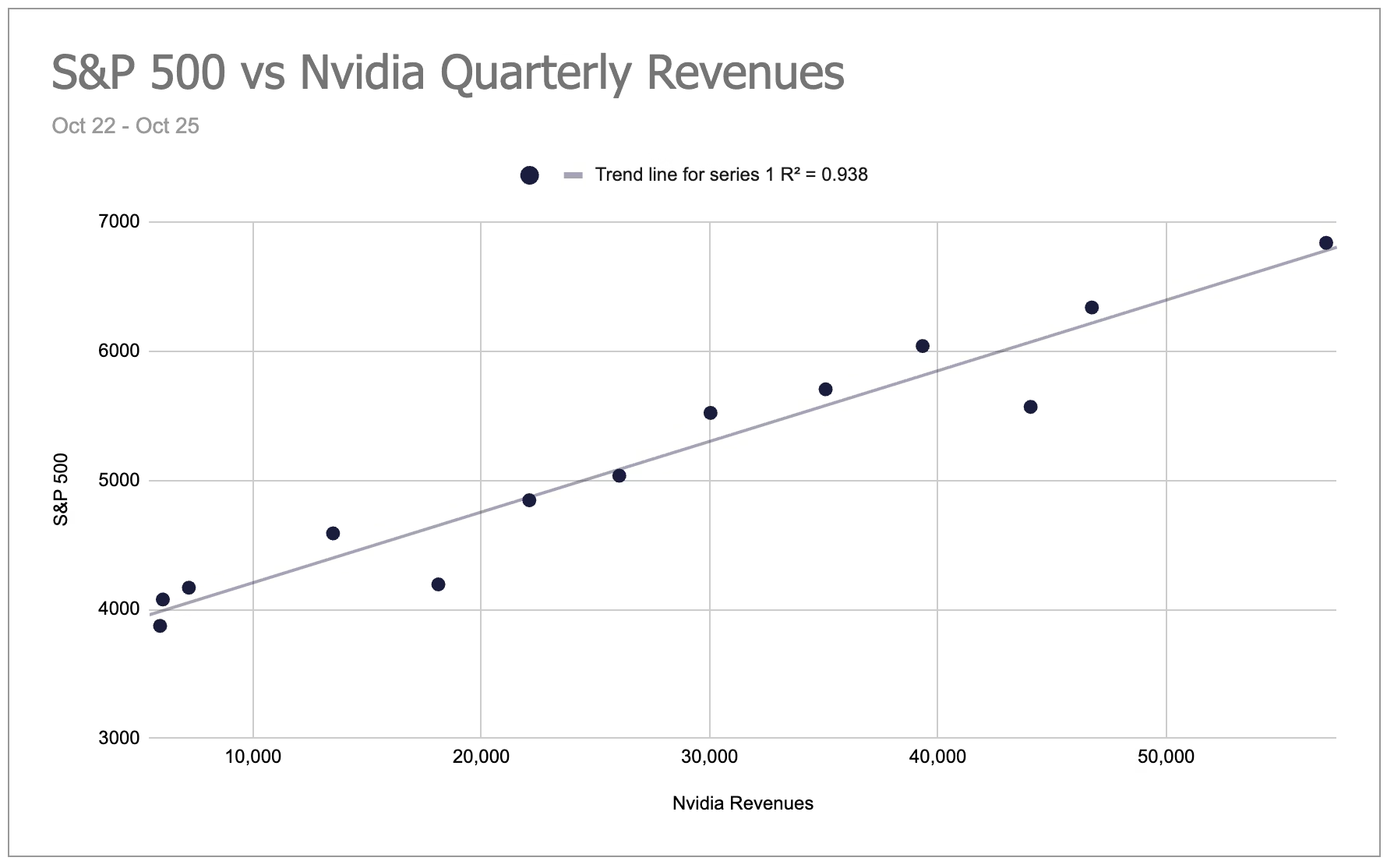

Three years ago, Sam Altman posted a tweet announcing the launch of ChatGPT. It is easy to forget how recent that was. The shift in sentiment across markets, technology and business since December 2022 has been rapid. AI optimism is the main explanation for the turnaround in the S&P 500 since then.

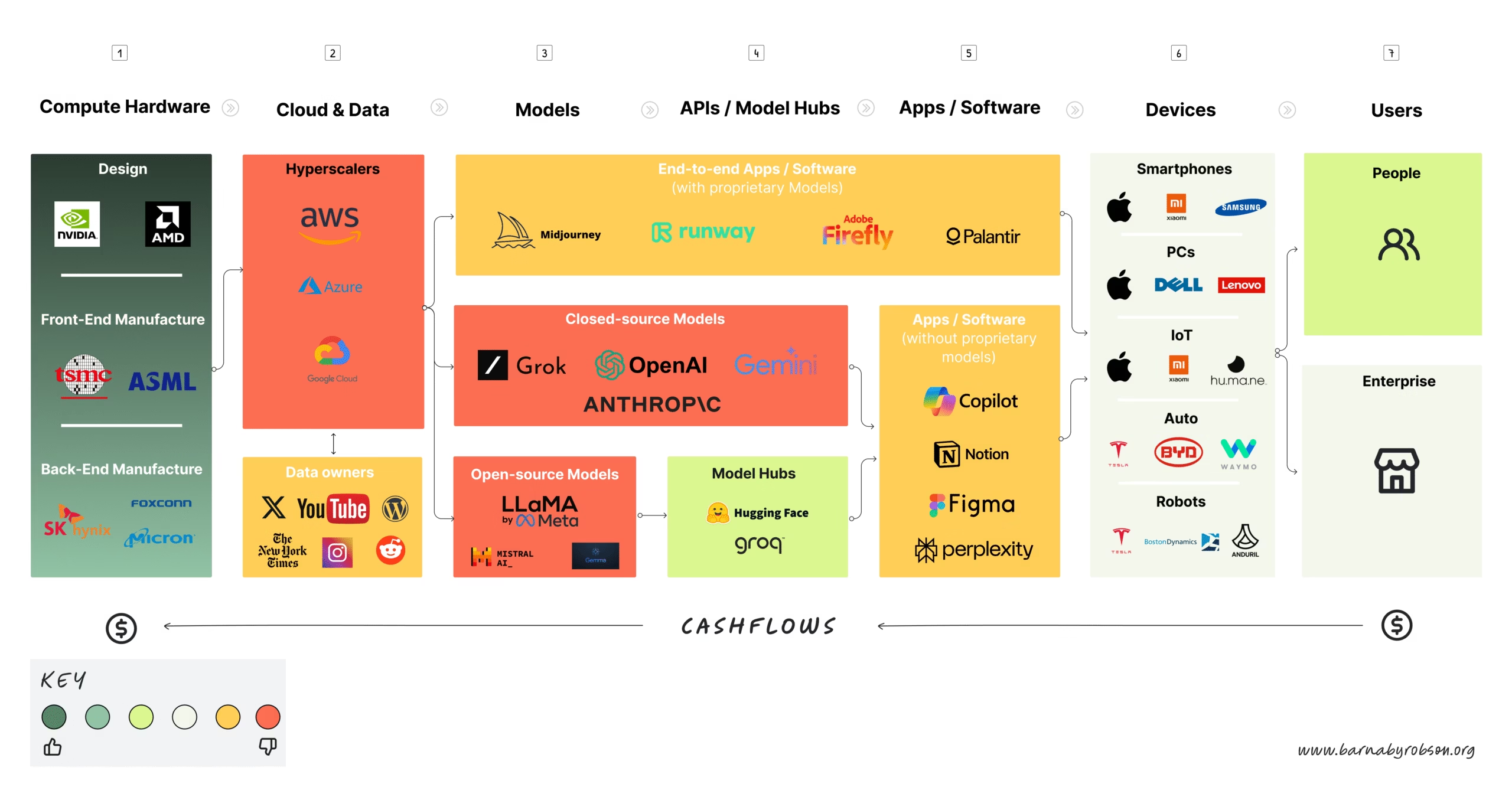

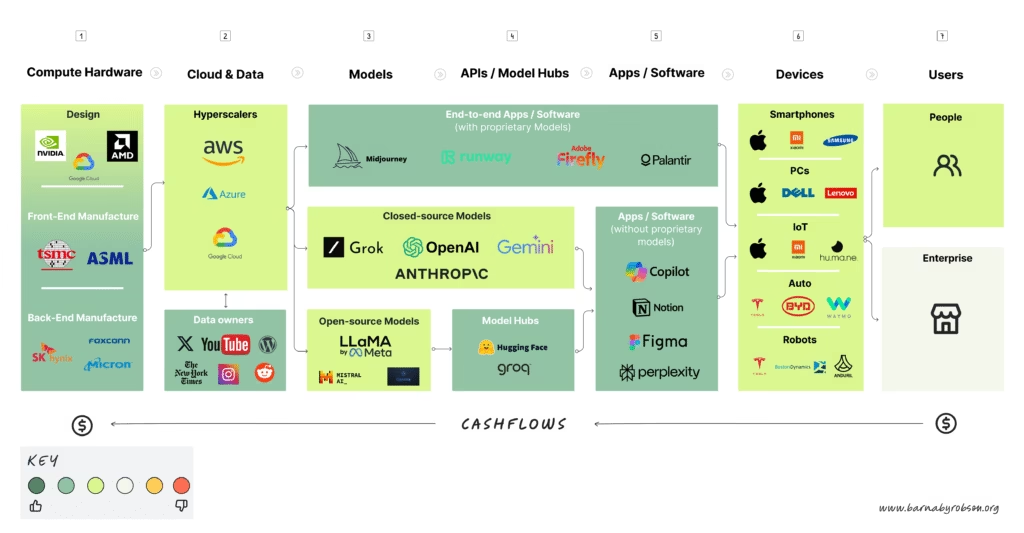

For most of 2023–25, the story was straightforward. Nvidia supplied the chips. The hyperscalers bought them. Everything else in the AI stack sat downstream of that relationship. If Nvidia grew, markets assumed AI adoption was moving in the right direction.

A single statistic summarises this well. The coefficient of determination (R²) between S&P 500 levels and Nvidia’s quarterly revenues from October 2022 to October 2025 is roughly 94 percent. In a statistical sense, almost the entire movement in the index over this period can be explained by Nvidia’s revenue cycle.

Yet growing concerns about the level of value captured by Nvidia have led most investors and operators to ask:

- Where else will value accrue in the AI stack?

- Will the hyperscalers ever earn a return on their AI compute spending?

These questions mattered because the answers influenced expectations for productivity, earnings and general demand across the economy.

As a result, markets became highly sensitive to four indicators:

- Chip sales: Nvidia (and TSMC) revenue forecasts as a proxy for demand

- CAPEX forecasts: infrastructure spending plans by the hyperscalers

- Downstream productivity: any evidence from studies on efficiency gains

- Labour data: signals of whether AI was augmenting or displacing roles

A shift in the hardware narrative

Over the last month, the assumptions underpinning this period have moved.

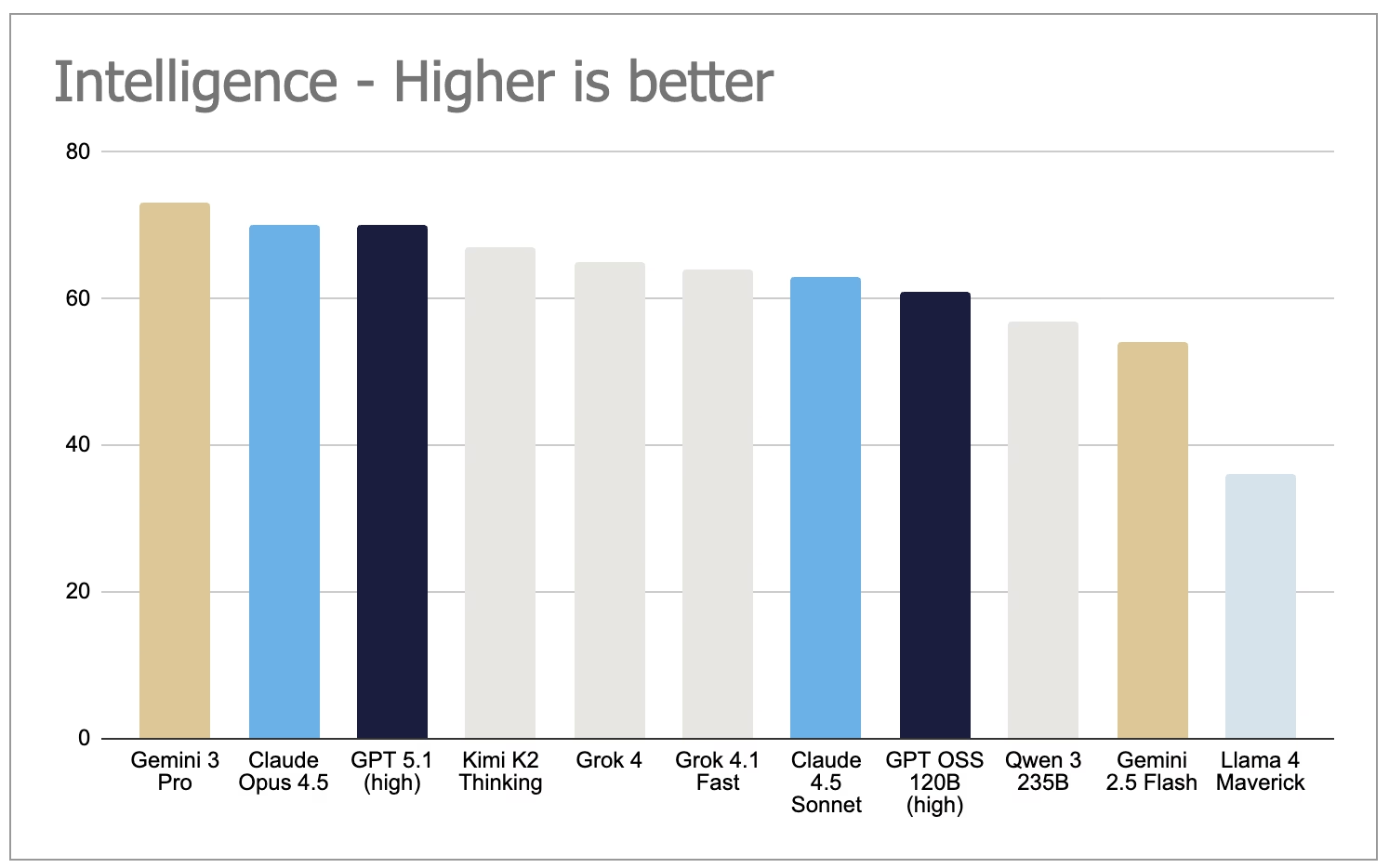

Google’s release of Gemini 3 showed that a state-of-the-art model, strong on agentic tasks, could be trained entirely on TPUs rather than Nvidia GPUs. This was the first clear demonstration that top-tier performance did not require Nvidia hardware.

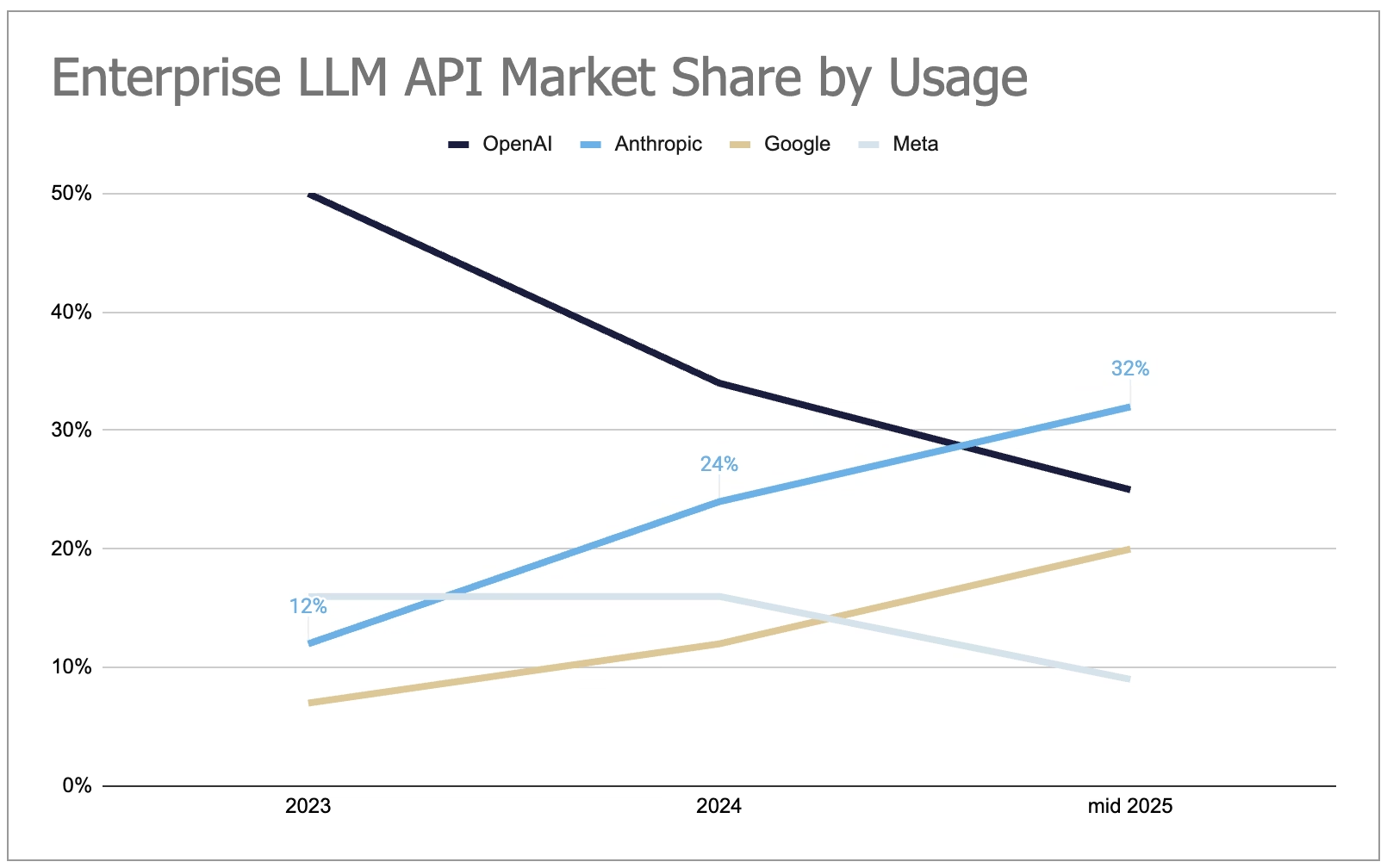

Around the same time, Google signed an agreement with Anthropic covering more than 1GW of TPU capacity. This matters because it moves TPUs from an internal Google asset towards something closer to a merchant silicon option. Anthropic’s Claude models are now the leading enterprise LLMs, so Anthropic’s training and inference choices influence where corporate workloads go.

Enterprise market-share estimates show this clearly.

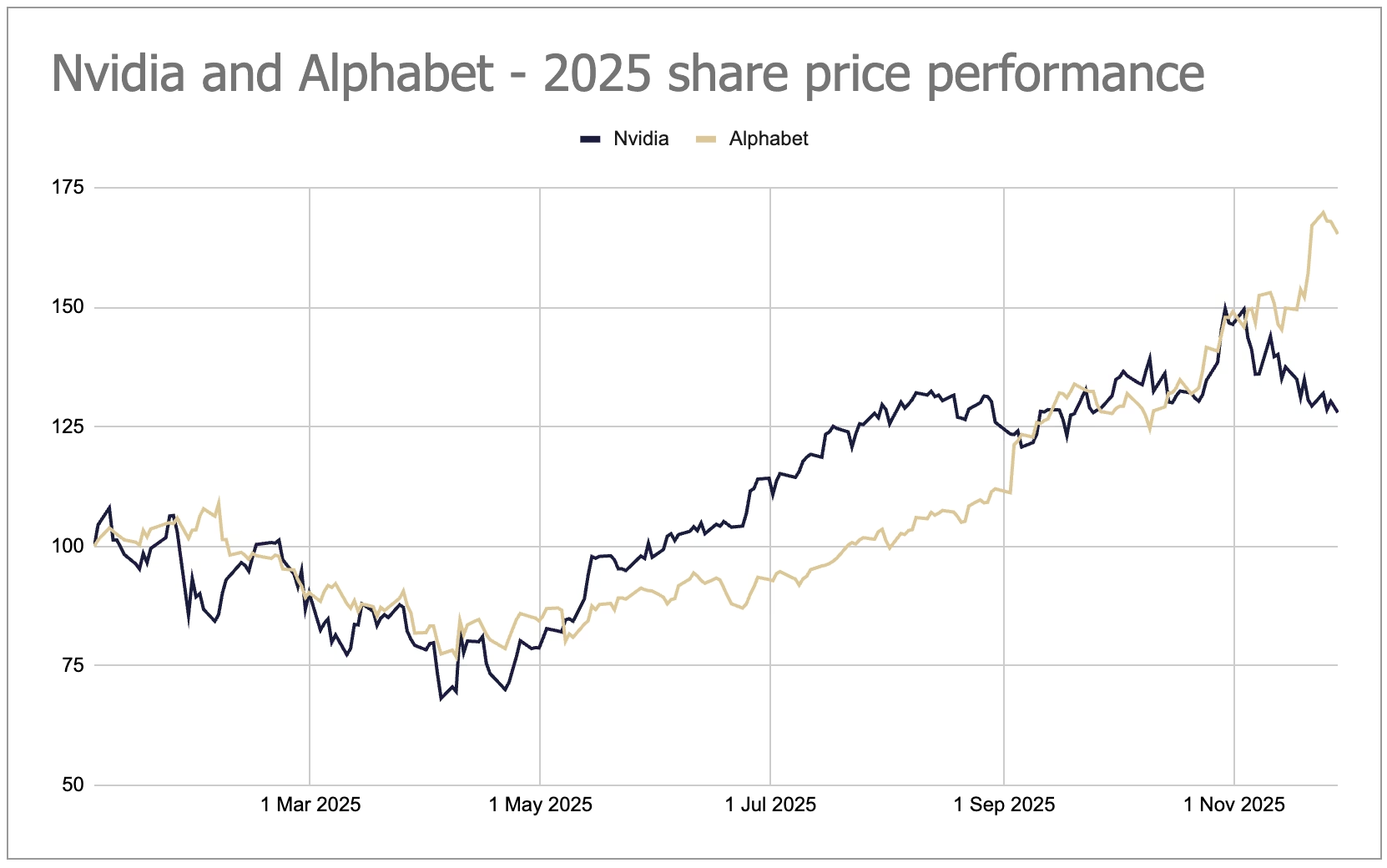

Together, Google and Anthropic now form a second large-scale compute ecosystem. The first remains the Nvidia–Microsoft–OpenAI complex. The AI sector has moved from a single dominant supply chain to two credible routes.

Markets have already reflected this shift: since 1 November 2025, Google has traded up; Nvidia has traded down.

How this changes the economics

The return-on-compute denominator has changed

At the datacentre, model and application layers, the barrier to AI profitability has been high infrastructure costs: the “Nvidia Tax”. Early data suggests that Google’s TPU v7 (Ironwood) offers roughly 44 percent lower total cost of ownership per server than Nvidia’s GB200 for sophisticated users.

If the cost of training and running models drops by anything close to half, the denominator in the return-on-invested-capital equation shrinks. More workloads become economically viable, and the time to breakeven shortens.
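A minimal sketch of this denominator effect, taking ROIC as after-tax operating profit divided by invested capital. It assumes a hypothetical deployment whose annual profit stays fixed and whose invested capital falls by the full 44 percent TCO saving cited above. The dollar figures are made-up placeholders, and applying the saving to all invested capital overstates the effect wherever chips are only part of the capital base.

```python
"""Toy illustration of how cheaper compute changes ROIC and payback.

Assumptions (hypothetical, for illustration only):
- annual_profit is the after-tax operating profit the deployment earns,
  and it does not change when the hardware gets cheaper;
- invested_capital is the up-front cost of the compute, and a cheaper
  hardware option cuts it by the full TCO saving.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    invested_capital: float  # up-front spend, $m (hypothetical)
    annual_profit: float  # after-tax operating profit per year, $m (hypothetical)

    def __post_init__(self) -> None:
        if self.invested_capital <= 0 or self.annual_profit <= 0:
            raise ValueError("capital and profit must be positive in this toy model")

    def roic(self) -> float:
        """Return on invested capital: annual profit / invested capital."""
        return self.annual_profit / self.invested_capital

    def payback_years(self) -> float:
        """Simple payback period, ignoring discounting and depreciation."""
        return self.invested_capital / self.annual_profit


def with_cost_reduction(d: Deployment, reduction: float) -> Deployment:
    """Shrink the denominator by `reduction` (0.44 means 44 percent cheaper)."""
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be in [0, 1)")
    return Deployment(d.invested_capital * (1 - reduction), d.annual_profit)


baseline = Deployment(invested_capital=1000.0, annual_profit=150.0)
cheaper = with_cost_reduction(baseline, 0.44)

for label, d in [("baseline", baseline), ("44% lower cost", cheaper)]:
    print(f"{label:>15}: ROIC {d.roic():.1%}, payback {d.payback_years():.1f} years")
```

Holding profit fixed, ROIC scales by 1 / (1 − 0.44), roughly 1.8x, and simple payback shortens by the same 44 percent. In practice, cheaper compute would also shift pricing and demand, so the numerator would move as well.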

Broader-based value accrual

Google’s strategic motive is clear: protect search and strengthen its cloud position. Reducing chip costs shifts value downstream to where Google is stronger (applications, APIs, data and workflow integration) rather than keeping it concentrated upstream in high-margin silicon.

Historically, value accrual in AI has looked something like this:

A more plausible long-term view looks closer to this:

Lower infrastructure costs broaden the set of feasible AI products, reduce dependence on a single supplier and support wider adoption across industries.

Conclusion

Nvidia’s chips are currently sold out, but the “Nvidia Tax” is likely to fall within the next year or so. If state-of-the-art results (e.g. Gemini 3) can be achieved on hardware that costs significantly less to operate, Nvidia’s margins will compress and the path to profitable AI deployment will shorten for the broader market.

Disclaimers

The views expressed here are my own and do not represent the position of any employer or organisation. This essay is for general discussion only and should not be taken as investment advice or a recommendation to buy or sell any security.

Sources and further reading

- SemiAnalysis – analysis of TPU economics, Nvidia supply dynamics and hyperscaler strategy: https://www.semianalysis.com

- Artificial Analysis – benchmark comparisons and model capability data: https://artificialanalysis.ai

- Menlo Ventures – enterprise LLM market-share estimates: https://menlovc.com

- Google Cloud – TPU v5 and v7 technical information: https://cloud.google.com/tpu

- Nvidia – datacentre product releases and fiscal results: https://www.nvidia.com

- Anthropic – announcement regarding use of Google TPUs: https://www.anthropic.com/news/expanding-our-use-of-google-cloud-tpus-and-services