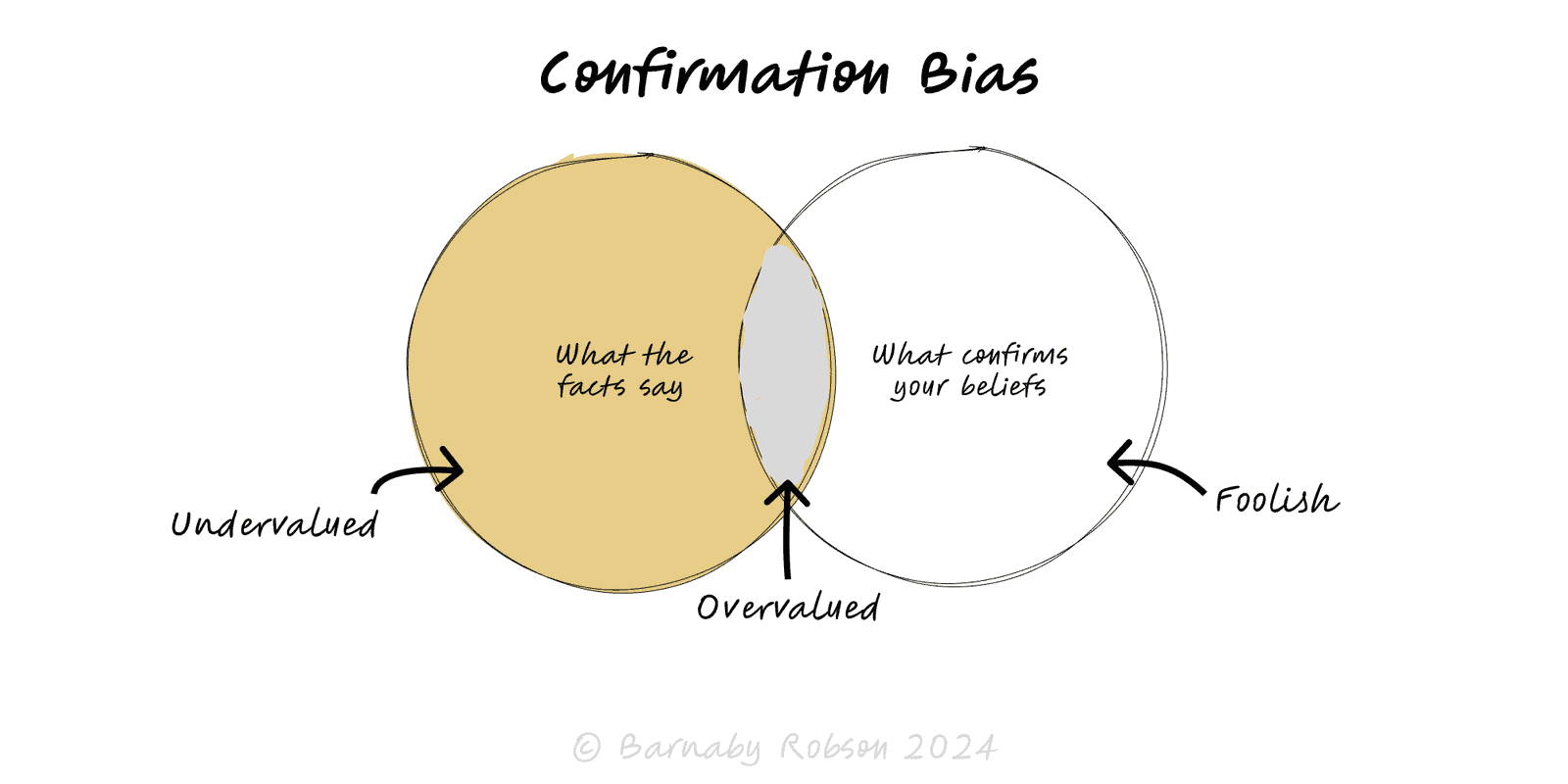

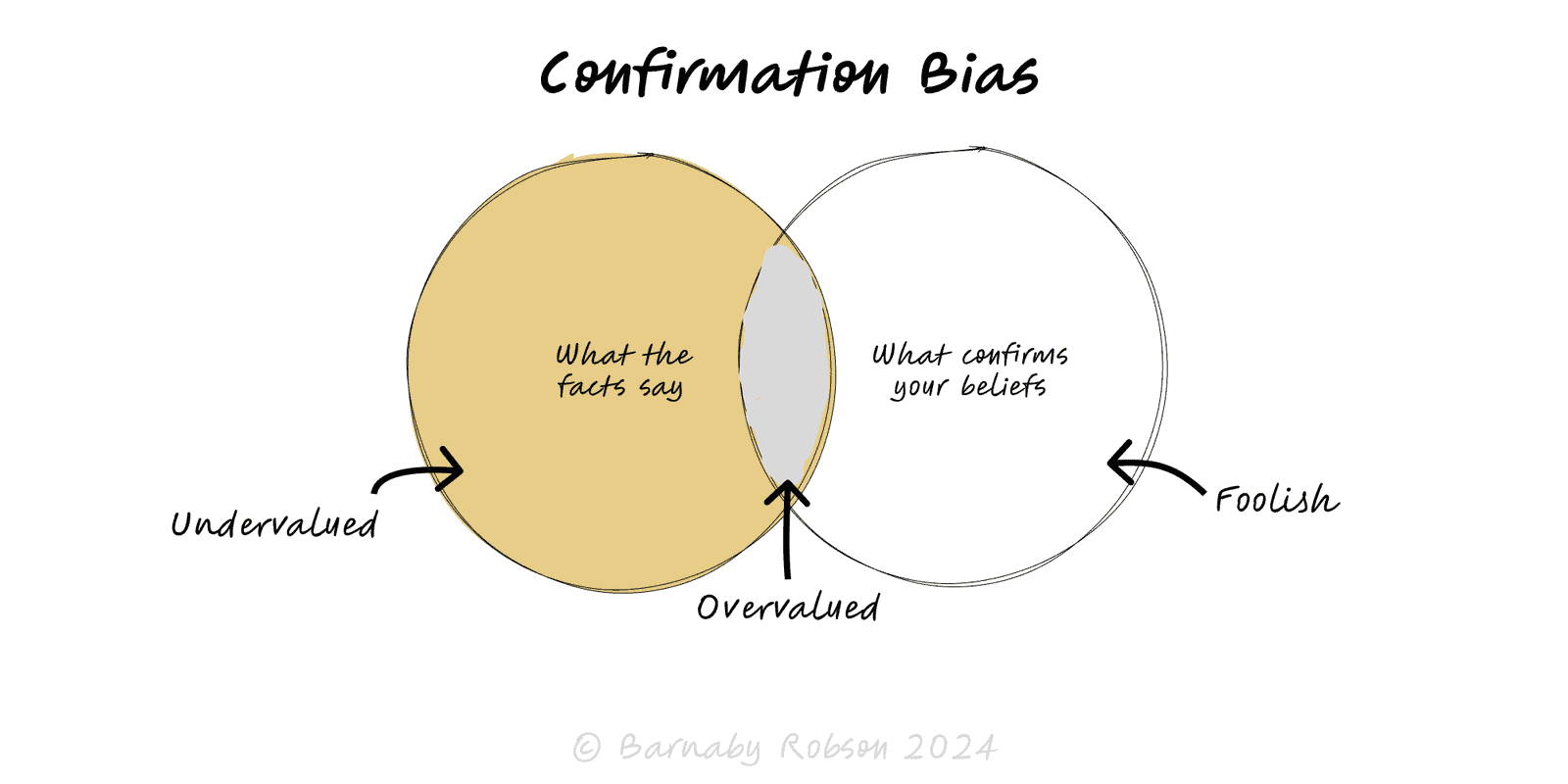

Confirmation Bias

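Confirmation bias is the tendency to seek, interpret and recall evidence in ways that support what we already believe. Treating it as a lens helps frame decisions and reduce error.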

Author

Peter Wason (early experimental work, 1960s); Daniel Kahneman & Amos Tversky; broader cognitive psychology


Confirmation bias is a family of tendencies that make us favour supportive evidence and downplay disconfirming facts. It shows up in what we look for (selective exposure), how we read (biased interpretation), and what we recall (memory bias). Because it feels like good reasoning from the inside, it reliably derails analysis, research, hiring and strategy unless the process is designed to counter it.

Search bias – we look where agreement is likely (friendly sources, aligned stakeholders).

Interpretation bias – ambiguous data is read as supportive; inconvenient data is nit-picked.

Memory bias – we recall confirming examples and forget counter-examples.

Hypothesis lock-in – early narratives anchor subsequent evidence gathering.

Identity & incentives – ego, status, and KPIs make backtracking costly, deepening the bias.

Algorithms amplify – feeds surface similar views, reinforcing selective exposure.

Decision reviews – strategy, M&A, pricing, vendor selection.

Research & analytics – survey wording, A/B test reads, significance hunting.

Hiring & performance – first impressions drive later evaluation and feedback.

Risk & compliance – “it hasn’t failed yet” used to dismiss latent risks.

Product discovery – customer quotes cherry-picked to fit a pet idea.

How to avoid

Write the decision & base rate – state the objective, options, criteria, and a reference-class outcome upfront.

Independent estimates first – collect forecasts/scores privately before any discussion (prevents anchoring and herding).

Pre-register tests – define success/fail thresholds, sample sizes and analysis plans before seeing data.

Consider the opposite – appoint a red team to build the strongest counter-case; log what would change your mind.

Blind or label-hide where possible – anonymise candidates, remove treatment labels, or use hold-outs.

Evidence matrix – list for/against/unknown for each option with linked sources; no facts without a link.

Force disconfirming searches – require at least two high-quality sources that challenge the favoured option.

Sequential logging – timestamp evidence as it is discovered so the story cannot be back-fitted afterwards (no HARKing, i.e. hypothesising after the results are known).

Calibration & scoring – record probability ranges; track Brier scores and adjust future confidence.

Incentives – reward error-correction and clean kills, not just “wins”.
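The calibration step above can be made concrete with a few lines of code. The following is a minimal sketch, assuming forecasts are about yes/no outcomes and are logged with a stated probability before the result is known. The `Forecast` record, the `brier_score` helper and the three example entries are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Forecast:
    """One logged prediction (hypothetical record layout)."""
    claim: str          # what was predicted
    probability: float  # stated probability that the claim is true, 0..1
    outcome: bool       # what actually happened, filled in once resolved


def brier_score(forecasts: list[Forecast]) -> float:
    """Mean squared gap between stated probability and outcome (0 is perfect)."""
    if not forecasts:
        raise ValueError("need at least one resolved forecast")
    for f in forecasts:
        if not 0.0 <= f.probability <= 1.0:
            raise ValueError(f"probability out of range for {f.claim!r}")
    total = sum((f.probability - float(f.outcome)) ** 2 for f in forecasts)
    return total / len(forecasts)


# Illustrative entries only, not real data.
log = [
    Forecast("Pilot hits the pre-registered conversion threshold", 0.9, False),
    Forecast("Vendor delivers on time", 0.7, True),
    Forecast("Price rise keeps churn under target", 0.6, True),
]

score = brier_score(log)
print(round(score, 3))  # 0.353
```

As a reference point, always answering 50% scores 0.25 on binary questions. A running score above that, as in this made-up log, suggests stated confidence is outrunning the evidence and future probability ranges should be widened.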

Checklist theatre – rituals without teeth; tie gates to go/no-go decisions.

False balance – giving low-quality rebuttals equal weight; keep standards for evidence quality.

Analysis paralysis – overcorrecting by never deciding; set decision windows and stop rules.

Narrative pride – protecting the story over the outcome; normalise reversals when evidence shifts.

Metric misalignment – KPIs that punish reversals or reward volume over validity entrench bias.

Click below to learn other mental models

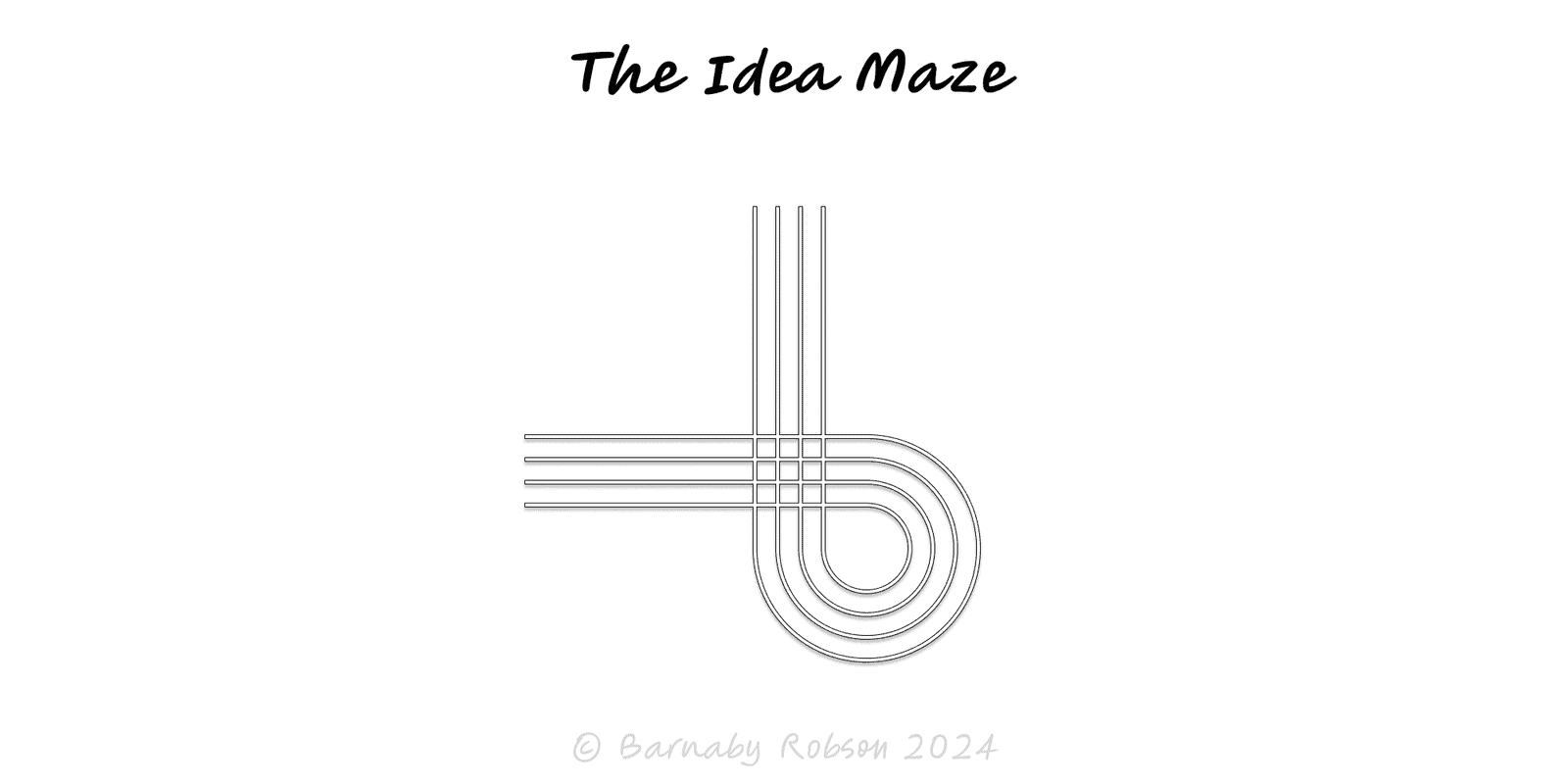

Before building, map the space: the key forks, dead ends and dependencies—so you can choose a promising path and run smarter tests.

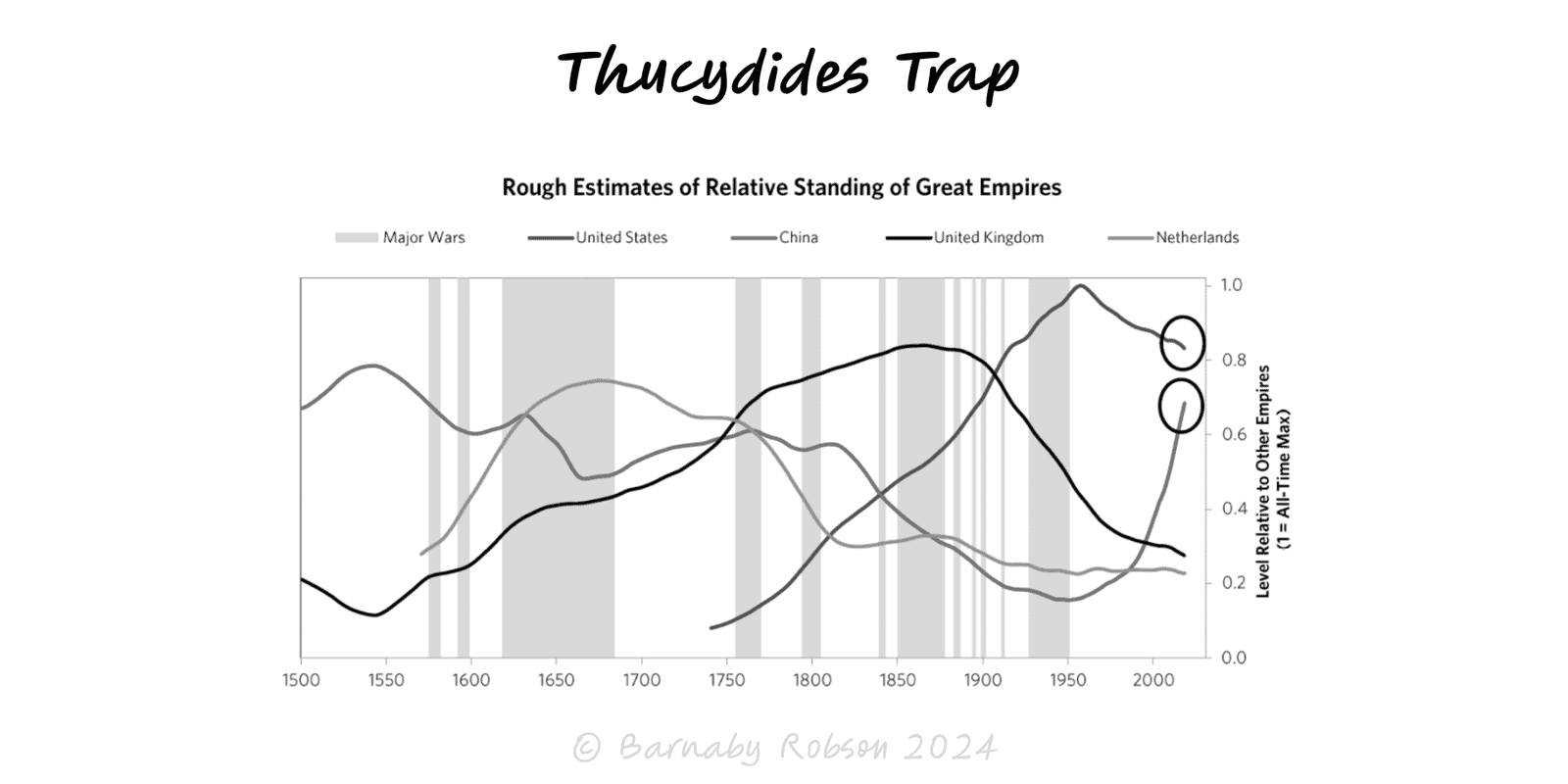

When a rising power threatens to displace a ruling power, fear and miscalculation can tip competition into conflict unless incentives and guardrails are redesigned.

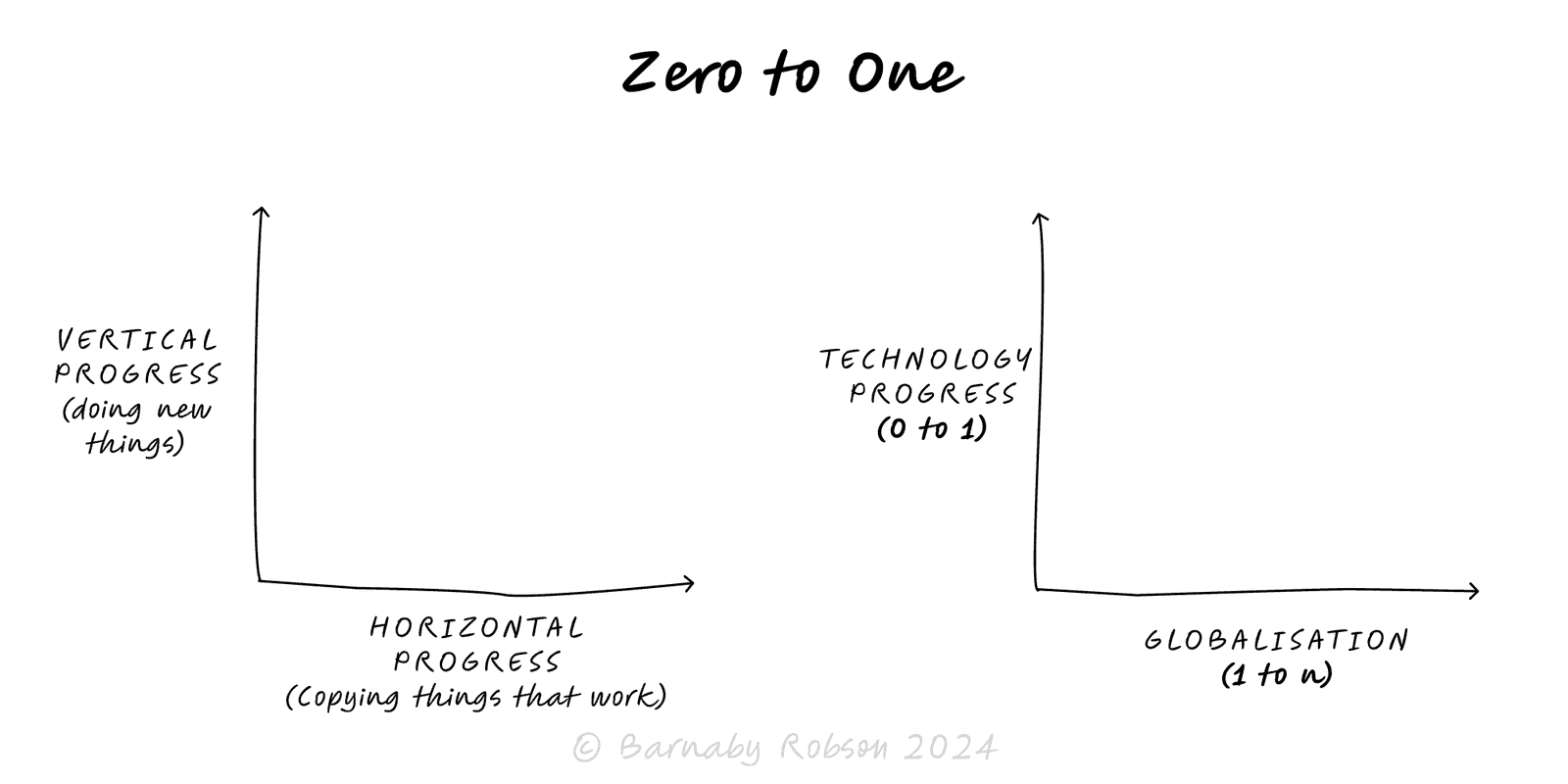

Aim for vertical progress—create something truly new (0 → 1), not just more of the same (1 → n). Win by building a monopoly on a focused niche and compounding from there.